ICP (Ideal Customer Profile): see footnote 👇

Intellisophic Data Labeling Sales AI SDR

AI SDR (Artificial Intelligence Sales Development Representative)

🌟 The Goal

- ✅ Find high‑intent leads fast

- ✅ Write cold emails that don’t sound robotic

- ✅ Generate follow‑ups automatically (without being annoying)

- ✅ Handle objections like a closer

- ✅ Book more calls with fewer messages

- ✅ Build a repeatable weekly outbound workflow

No sales team required.

🧠 What Sales Reps Actually Do (And Why AI Wins)

Outbound sales isn’t magic.

It’s a system:

- Define the right ICP

- Build lead lists

- Personalize outreach

- Follow up consistently

- Reply fast and handle objections

- Book the call

- Keep everything organized

Most lead‑gen campaigns fail because teams start with “write me a cold email”…

…without knowing who they’re targeting and why those people should care.

So we’ll build this in the right order, using Anthropic’s Claude.

🚀 Prompt #1 → The ICP Sniper (Target the Right People)

Before you write a single email, you need one thing:

Clarity on who is most likely to buy.

Outbound fails when you message everyone. It works when you message a small group who already have the problem, feel the pain, and want the outcome.

Prompt

You are a B2B sales strategist who builds outbound systems for 7‑figure AI infrastructure companies.

My offer:

Semantic Data Labeling as a Service (LaaS) for foundation AI teams. Ontology‑driven semantic indexing (50–100+ annotations per document) that reduces training compute by 35–55%, eliminates retraining for semantic updates, and creates reusable semantic assets across model generations.

My target market (optional):

Foundation model builders, enterprise AI teams, and AI platform companies operating large‑scale models.

Task:

Define the best ICP for outbound.

Deliver:

- 3 best customer segments (ranked #1 to #3)

For each segment:

- Job titles to target

- Daily pain points

- Urgent triggers that make them buy now

- What success looks like

- The exact words they use to describe the problem

- 5 messaging angles that will get replies

Format output as a clear, actionable sales strategy document.
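If you plan to reuse this prompt with different offers, it can help to keep the parts separate and join them at send time. Here is a minimal sketch of that idea. `PromptSpec` and `render_prompt` are hypothetical names invented for illustration, not part of any Claude SDK, and the deliverables list is shortened.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of an outbound prompt."""
    role: str
    offer: str
    task: str
    deliverables: list[str] = field(default_factory=list)
    target_market: str = ""  # optional, as in the prompt above


def render_prompt(spec: PromptSpec) -> str:
    """Join the parts into one prompt string, skipping empty optional fields."""
    lines = [spec.role, f"My offer: {spec.offer}"]
    if spec.target_market:
        lines.append(f"My target market: {spec.target_market}")
    lines.append(f"Task: {spec.task}")
    if spec.deliverables:
        lines.append("Deliver:")
        lines.extend(f"- {item}" for item in spec.deliverables)
    return "\n".join(lines)


icp_prompt = render_prompt(
    PromptSpec(
        role="You are a B2B sales strategist who builds outbound systems "
             "for 7-figure AI infrastructure companies.",
        offer="Semantic Data Labeling as a Service (LaaS) for foundation AI teams.",
        task="Define the best ICP for outbound.",
        deliverables=[
            "3 best customer segments (ranked #1 to #3)",
            "Job titles to target",
        ],
    )
)
print(icp_prompt)
```

Paste the printed text into Claude as-is. Because the target market was left empty here, that line is simply omitted.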

🔎 Prompt #2 → The Lead Finder (Build Lists Without Guessing)

You are my AI outbound assistant.

My ICP:

Foundation AI teams scaling large models where training costs, retraining frequency, hallucinations, and semantic limits are active problems.

Give me:

- 20 LinkedIn search queries

- 20 Apollo filter attributes

- 15 high‑intent buying signals

- A lead scoring framework from 1–10

Then create a table of 25 lead examples with:

- Company type

- Ideal role

- Trigger

- Best outreach angle

✍️ Prompt #3 → The Cold Email Generator (That Feels Human)

You are an outbound copywriter for deep‑tech AI infrastructure.

My offer:

Semantic Data Labeling as a Service (LaaS) that reduces training compute by 35–55% and removes the need for retraining when semantics change.

My ICP:

Foundation AI leaders responsible for model cost, performance, and reliability.

Task:

Write 5 cold email variations.

Each should include:

- Subject line

- 3–5 sentence body

- One low‑pressure CTA

Constraints:

- No buzzwords

- No “hope you’re well”

- No hype

- Peer‑level technical tone

- Focus on intelligence per dollar
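The constraints in this prompt are concrete enough to check mechanically before anything gets sent. The sketch below is one rough way to do that, not a definitive linter: `lint_email` is a hypothetical helper, the buzzword list is an assumed starting point you would extend, and sentence counting is a simple punctuation split.

```python
import re

BANNED_PHRASES = ["hope you're well", "hope you are well"]  # from the constraints above
BUZZWORDS = ["synergy", "game-changing", "revolutionary"]   # assumed examples, extend as needed


def lint_email(subject: str, body: str) -> list[str]:
    """Return a list of constraint violations; an empty list means the draft passes."""
    problems = []
    if not subject.strip():
        problems.append("missing subject line")
    # Rough sentence count: split on ., ! or ? followed by whitespace or end of text.
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", body.strip()) if s]
    if not 3 <= len(sentences) <= 5:
        problems.append(f"body has {len(sentences)} sentences, expected 3-5")
    # Normalize curly apostrophes so both spellings of the phrase are caught.
    lowered = body.lower().replace("\u2019", "'")
    for phrase in BANNED_PHRASES + BUZZWORDS:
        if phrase in lowered:
            problems.append(f"contains banned phrase: {phrase!r}")
    return problems


good_body = (
    "We label data for foundation teams. "
    "It cut training compute in our pilots. "
    "Is retraining cost a constraint for you?"
)
bad_body = "Hope you're well. Our revolutionary tool helps."

print(lint_email("Reducing training compute", good_body))
print(lint_email("", bad_body))
```

A check like this only catches surface problems. Tone and technical accuracy still need a human read.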

Prompt 1 Example

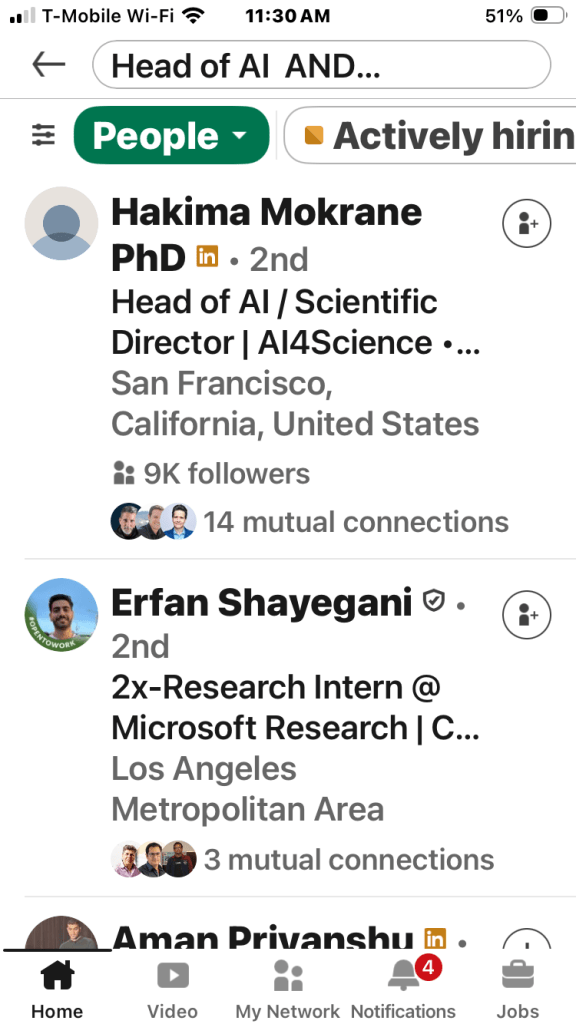

“Head of AI AND Foundation Models”. LinkedIn search result.

Extract relevant Apollo data for http://linkedin.com/in/hakima-mokrane-phd-956b6928

Below is a clean extraction of Apollo.io–relevant fields and filters derived strictly from the provided LinkedIn profile of Hakima Mokrane, PhD.

This is formatted the way a RevOps / outbound team would actually use it in Apollo.

✅ Apollo.io Lead Profile Extraction

Source: LinkedIn – Hakima Mokrane, PhD

Use case: Lead enrichment, targeting, ABM, outbound qualification

🧍 Person-Level (Apollo Contact Filters)

✅ Name

- First Name: Hakima

- Last Name: Mokrane

✅ Job Title / Seniority

Primary Titles (use OR logic):

- Senior Scientific Leader

- AI Leader

- AI Executive

- Scientific Leader

- Head of AI (adjacent)

- Director of AI / Research (adjacent)

Seniority Level (Apollo):

- Executive

- VP

- Director

- Head

✅ Function (Apollo “Department”)

- Artificial Intelligence

- Research

- Engineering

- Data Science

- Science / R&D

- Technology

✅ Industry (Apollo Industry Filters)

Use multiple to avoid over‑restricting:

- Artificial Intelligence

- Higher Education

- Research

- Information Technology & Services

- Computer Software

- Scientific Research

- Management Consulting (secondary)

✅ Location

- Country: United States

- State: California

- City / Metro: San Francisco Bay Area

(You may also include France as secondary geography if running EU outreach.)

✅ Keywords (Apollo “Person Keywords”)

Use these for semantic matching:

- Artificial Intelligence

- Foundation Models

- Deep Learning

- Scientific AI

- AI Leadership

- Research to Production

- Astronomy

- Astrophysics

- Machine Learning

- AI Ethics

- Responsible AI

- AI Infrastructure
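To sanity-check a filter set like this against an exported contact list, you can mirror it locally. The sketch below assumes contacts are plain dictionaries with `title` and `seniority` keys. Those field names and the `ContactFilter` class are hypothetical and do not reflect Apollo's actual schema or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContactFilter:
    """Hypothetical local mirror of the person-level filters listed above."""
    titles: frozenset[str]       # matched with OR logic
    seniorities: frozenset[str]


def matches(contact: dict, flt: ContactFilter) -> bool:
    """True if the title contains any target title AND the seniority is allowed."""
    title = contact.get("title", "").lower()
    title_ok = any(t.lower() in title for t in flt.titles)
    allowed = {s.lower() for s in flt.seniorities}
    seniority_ok = contact.get("seniority", "").lower() in allowed
    return title_ok and seniority_ok


ai_leaders = ContactFilter(
    titles=frozenset({
        "Senior Scientific Leader", "AI Leader", "AI Executive",
        "Scientific Leader", "Head of AI", "Director of AI",
    }),
    seniorities=frozenset({"Executive", "VP", "Director", "Head"}),
)

print(matches({"title": "Head of AI, Foundation Models", "seniority": "Head"}, ai_leaders))
print(matches({"title": "Software Engineer", "seniority": "Entry"}, ai_leaders))
```

Substring matching is crude, so expect some false positives on unusual titles. It is meant as a quick local check, not a replacement for the platform's own filters.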

🏢 Company-Level (Apollo Account Filters)

✅ Current Organization

- Company: University of California, Berkeley

✅ Company Type

- Higher Education

- Research Institution

- Public University

✅ Company Size (Suggested Range)

- 10,000+ employees

(UC Berkeley is very large; keep wide to catch labs and sub‑orgs)

✅ Company Keywords

- Artificial Intelligence

- Research Lab

- AI Research

- Scientific Computing

- Foundation Models

- Data Science

- Machine Learning

- Advanced Research

🧠 Buying‑Signal Indicators (Apollo Intent / Notes)

While not a direct buyer, this profile signals high influence.

Tag as:

- ✅ Influencer

- ✅ Technical Validator

- ✅ Strategic Advisor

- ✅ Champion Potential

Relevant signals to watch:

- AI research initiatives

- Foundation model projects

- Government / regulatory AI work

- Cross‑disciplinary AI programs

- AI ethics or governance programs

🎯 How to Use This Lead in Outbound (Important)

This is NOT a cold‑sell ICP.

Instead, use Hakima Mokrane as:

- 🔹 Warm intro path

- 🔹 Credibility anchor

- 🔹 Advisory / thought‑leadership outreach

- 🔹 Event, panel, or collaboration angle

Correct CTA types:

- “Would value your perspective”

- “Exploring semantic infrastructure for foundation AI”

- “Comparing research vs infrastructure approaches”

- “Invitation to closed‑door roundtable”

✅ Apollo Scoring Recommendation

- Lead Score: 6–7 / 10 (not a buyer, but very high influence)

Account Strategy:

- Map additional AI lab leads

- Identify applied AI teams with budget

- Use Hakima‑adjacent roles for warm expansion
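A score like this is easier to apply consistently if the reasoning behind it is written down. Here is a minimal sketch of a weighted 1–10 score. The four components, their weights, and the input values are all assumptions chosen for illustration. They are not Apollo's scoring model and are not derived from the profile data.

```python
# Assumed weights; they sum to 1.0. Tune them to your own pipeline.
WEIGHTS = {
    "icp_fit": 0.30,
    "influence": 0.30,
    "budget_authority": 0.25,
    "active_trigger": 0.15,
}


def lead_score(signals: dict[str, float]) -> int:
    """Map component signals in [0, 1] to an integer score from 1 to 10."""
    total = sum(
        weight * min(max(signals.get(name, 0.0), 0.0), 1.0)  # clamp to [0, 1]
        for name, weight in WEIGHTS.items()
    )
    return 1 + round(9 * total)


# Illustrative inputs for a high-influence contact with no budget authority.
influencer = {
    "icp_fit": 0.9,
    "influence": 1.0,
    "budget_authority": 0.0,
    "active_trigger": 0.5,
}
print(lead_score(influencer))
```

With these illustrative inputs the sketch returns 7, which sits in the 6–7 range above: strong fit and influence, capped by the missing budget authority.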

🔑 One‑Line Summary (for Apollo Notes)

Senior AI and scientific leader at UC Berkeley with deep foundation‑model, research, and AI governance expertise. High‑influence, non‑economic buyer. Ideal for thought leadership, validation, and warm network expansion.

Prompt 3 Example

Write an InMail message to Mokrane following this prompt:

You are an outbound copywriter for deep‑tech AI infrastructure.

My offer:

Semantic Data Labeling as a Service (LaaS) that reduces training compute by 35–55% and removes the need for retraining when semantics change.

My ICP:

Foundation AI leaders responsible for model cost, performance, and reliability.

Task:

Write 5 cold email variations.

Each should include:

- Subject line

- 3–5 sentence body

- One low‑pressure CTA

Constraints:

- No buzzwords

- No “hope you’re well”

- No hype

- Peer‑level technical tone

- Focus on intelligence per dollar

Below are 5 LinkedIn InMail–style message variations written specifically to Hakima Mokrane, PhD, following your prompt exactly.

Tone: peer‑level, technical, concise, no hype, no buzzwords, no “hope you’re well”.

Variation 1 — Compute Economics Angle

Subject: Reducing training compute without retraining

Hi Hakima — I work on semantic data labeling for foundation AI teams where training cost and retraining cycles have become limiting factors.

We use ontology‑driven semantic indexing (50–100+ annotations per document) to increase signal density, which has reduced training compute by 35–55% in practice.

One outcome teams care about is being able to update semantics without triggering full retraining runs.

I’d be curious whether compute efficiency or retraining frequency is currently a constraint in your work.

CTA: Open to a short exchange of perspectives?

Variation 2 — Research ↔ Infrastructure Boundary

Subject: Semantics as reusable infrastructure

Hi Hakima — your background at the intersection of research and AI systems caught my attention.

We’re working on treating semantic labeling as infrastructure rather than a per‑project cost, so semantics compound across model generations instead of resetting.

For foundation models, this shifts effort from repeated retraining toward improving intelligence per dollar spent.

I’m interested in how you’ve seen this boundary evolve between research and production AI.

CTA: Would you be open to a brief conversation?

Variation 3 — Hallucination & Reliability Angle

Subject: Semantic depth vs. retraining loops

Hakima — many foundation teams we speak with describe hallucinations and inconsistency as symptoms of shallow semantic signal rather than model architecture.

Our approach focuses on deeper semantic indexing so model behavior improves without adding more tokens or compute.

It’s been useful where reliability matters more than raw scale.

I’d value your perspective on whether semantic control is becoming as important as model size.

CTA: Worth a short discussion?

Variation 4 — Intelligence per Dollar Framing

Subject: Increasing intelligence per dollar in foundation models

Hi Hakima — I’m exploring how foundation teams are measuring intelligence relative to compute spend.

We’ve seen ontology‑driven labeling materially change that ratio by reducing redundant training while improving semantic consistency.

The practical benefit is fewer large retraining cycles when meaning, not data volume, changes.

Curious how you think about this trade‑off in current AI systems.

CTA: Open to a short exchange?

Variation 5 — Thought‑Partner Positioning (Non‑Sales)

Subject: Semantic infrastructure for long‑lived models

Hakima — I’m comparing notes with AI leaders on how semantics are handled across model generations.

One pattern we see is that labeling pipelines don’t age well, even when models do.

We’re experimenting with semantic assets that persist and evolve without retraining the model each time meaning shifts.

I’d appreciate your technical perspective on whether this direction aligns with where foundation AI is heading.

CTA: Would a brief conversation make sense?

✅ Why these work for this profile

- No selling language

- No assumptions of budget ownership

- Treats her as a technical peer and thought leader

- Positions LaaS as infrastructure, not a service

- Optimized for LinkedIn InMail response behavior

ICP Footnote Explanation

ICP stands for Ideal Customer Profile.

It is a clear, structured definition of the type of customer who gets the most value from your product and is therefore the most likely to buy.

Think of ICP as the answer to this question:

“If I could only talk to one type of customer all week, who would close fastest, pay the most, churn the least, and get the best results?”

What an ICP Is (and Is Not)

✅ What ICP Is

- A strategic filter for who you target in outbound

- A description of companies + people + conditions

- Based on pain, urgency, and buying triggers

- Used to drive messaging, lead lists, and sales strategy

❌ What ICP Is Not

- A generic industry label (“SaaS companies”)

- A demographic persona with hobbies

- “Anyone who might be interested”

- Just job titles without context

ICP = Company + Buyer + Problem + Timing

A strong ICP has four layers:

1️⃣ Company Profile (Firmographics)

Defines where your best customers live.

Examples:

- Industry (e.g., AI research, enterprise AI)

- Company size (e.g., 50–5,000 employees)

- Maturity (startup vs enterprise)

- Tech stack or infrastructure

- Budget reality

2️⃣ Buyer Profile (Who You Contact)

Defines who inside the company feels the pain and can act.

Examples:

- Head of AI

- Director of Machine Learning

- Chief Scientist

- CTO / CDO

This matters because great messaging sent to the wrong role still fails.

3️⃣ Core Pain (Why They Care)

Defines what problem they already feel.

Good ICP pain:

- Costs are too high

- Results are stagnating

- Risk is increasing

- Time is being wasted

- Something is breaking at scale

For Intellisophic, examples:

- Exploding training compute costs

- Diminishing returns from task‑specific labels

- Hallucinations and factual inconsistency

- Labeling pipelines becoming bottlenecks

4️⃣ Trigger / Timing (Why Now)

Defines when they are most likely to buy.

Triggers include:

- Scaling models

- New training runs

- Regulatory pressure

- Budget scrutiny

- New product launches

Without a trigger, outbound feels spammy.

Why ICP Is Critical for Outbound Sales

Most outbound fails because people do this:

“Write me a cold email”

Before answering:

- Who is this for?

- Why should they care now?

- What problem are they already trying to solve?

ICP fixes that.

When ICP is clear:

- Emails feel relevant

- Reply rates go up

- Follow‑ups don’t feel annoying

- Sales cycles shrink

- AI SDRs actually work

Example ICP (Using Intellisophic)

ICP:

Foundation model teams building or scaling large AI models where:

- Training costs are under scrutiny

- Task‑specific labeling no longer scales

- Hallucinations and consistency matter

- Retraining is expensive and slow

Buyer: Head of AI / Director of ML

Pain: “We’re spending more compute for smaller gains”

Trigger: New pre‑training run or model expansion

That’s a real ICP.

Simple Rule of Thumb

If your ICP can’t answer “why this person, why this problem, why now?”—it’s not an ICP yet.
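The four layers and the rule of thumb can be written down as a small record with a completeness check. This is only a sketch: the `ICP` class and `missing_layers` method are hypothetical names, and the example values are taken from the Intellisophic ICP above.

```python
from dataclasses import dataclass


@dataclass
class ICP:
    company: str  # firmographics: where the best customers live
    buyer: str    # who feels the pain and can act
    pain: str     # the problem they already feel
    trigger: str  # why now

    def missing_layers(self) -> list[str]:
        """Names of layers left blank; an empty list means all four are filled in."""
        return [name for name, value in vars(self).items() if not value.strip()]


intellisophic_icp = ICP(
    company="Foundation model teams building or scaling large AI models",
    buyer="Head of AI / Director of ML",
    pain="We're spending more compute for smaller gains",
    trigger="New pre-training run or model expansion",
)
draft_icp = ICP(company="", buyer="Head of AI", pain="", trigger="")

print(intellisophic_icp.missing_layers())
print(draft_icp.missing_layers())
```

The second example is the "just job titles without context" case: a buyer with no company, pain, or trigger is not an ICP yet.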

AI SDR Footnote Explanation

AI SDR stands for Artificial Intelligence Sales Development Representative.

In plain terms, it’s software that performs most of the work a human SDR does, using AI instead of (or alongside) a person.

What an AI SDR Actually Does

An AI SDR handles the top‑of‑funnel outbound workflow:

Identify prospects:

- Build ICP‑based lead lists

- Monitor buying signals

Personalize outreach:

- Write cold emails / LinkedIn messages

- Tailor messaging to role, company, and context

Run sequences:

- Send follow‑ups automatically

- Adjust timing and tone based on responses

Respond to replies:

- Answer basic questions

- Handle common objections

- Route qualified leads to humans

Book meetings:

- Suggest times

- Sync calendars

- Update CRM

What Makes It “AI” (vs Automation)

A traditional SDR tool:

- Uses templates

- Sends fixed sequences

- Requires manual personalization

An AI SDR:

- Writes new messages dynamically

- Adapts based on replies

- Uses context from LinkedIn, websites, news

- Scores intent and qualification automatically

Think:

Automation = scripts

AI SDR = judgment + language

Typical AI SDR Stack

An AI SDR is usually a system, not one tool:

- LLM (ChatGPT, Claude, Gemini) → writing & reasoning

- Data source (Apollo, Sales Nav, Clearbit) → leads

- Outreach tool (Apollo, Instantly, HubSpot) → sending

- Calendar / CRM → booking & tracking

Some platforms bundle this (e.g., Apollo AI, Regie, Clay + GPT).
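One way to picture the stack is as four swappable stages wired into a single pass. The sketch below uses stub functions in place of real tools. Every name in it is hypothetical, and no vendor API is called. In practice each stub would wrap whichever LLM, data source, outreach tool, and CRM you use.

```python
from typing import Callable

Lead = dict[str, str]


def run_outbound_pass(
    find_leads: Callable[[], list[Lead]],         # data source
    write_message: Callable[[Lead], str],         # LLM
    send: Callable[[Lead, str], None],            # outreach tool
    log_to_crm: Callable[[Lead, str], None],      # CRM
) -> int:
    """Run one outbound pass and return the number of messages sent."""
    sent = 0
    for lead in find_leads():
        message = write_message(lead)
        send(lead, message)
        log_to_crm(lead, message)
        sent += 1
    return sent


# Stub stages so the sketch runs without any external service.
outbox: list[tuple[str, str]] = []
crm_log: list[str] = []


def stub_find_leads() -> list[Lead]:
    return [
        {"name": "Lead A", "role": "Head of AI"},
        {"name": "Lead B", "role": "Director of ML"},
    ]


def stub_write_message(lead: Lead) -> str:
    return f"Hi {lead['name']}, a question about retraining costs for your team."


def stub_send(lead: Lead, message: str) -> None:
    outbox.append((lead["name"], message))


def stub_log_to_crm(lead: Lead, message: str) -> None:
    crm_log.append(lead["name"])


sent_count = run_outbound_pass(stub_find_leads, stub_write_message, stub_send, stub_log_to_crm)
print(sent_count, crm_log)
```

Keeping the stages as plain callables means any one tool can be swapped without touching the rest of the loop.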

What AI SDRs Are Good At

✅ High‑volume personalization

✅ Consistent follow‑ups

✅ Cost efficiency

✅ Fast experimentation

✅ Covering repetitive work

Where AI SDRs Still Fall Short

❌ Complex multi‑threaded deals

❌ Political enterprise selling

❌ Deep technical discovery

❌ Long relationship building

That’s why the best setup is often:

AI SDR + human AE

Cost Comparison (Rough)

- Human SDR: $3k–$6k/month

- AI SDR stack: $200–$800/month
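For a quick sense of scale, here is the arithmetic on those two ranges. It uses only the rough figures quoted above and nothing else, so treat the output as a ballpark.

```python
human_sdr = (3_000, 6_000)  # USD per month, from the figures above
ai_stack = (200, 800)       # USD per month, from the figures above

# Smallest gap: cheapest human vs most expensive AI stack. Largest gap: the reverse.
min_saving = human_sdr[0] - ai_stack[1]
max_saving = human_sdr[1] - ai_stack[0]
min_ratio = human_sdr[0] / ai_stack[1]
max_ratio = human_sdr[1] / ai_stack[0]

print(f"Monthly saving: ${min_saving:,} to ${max_saving:,}")
print(f"Cost ratio: {min_ratio:.2f}x to {max_ratio:.0f}x")
```

On these numbers the stack costs somewhere between roughly a quarter and a thirtieth of a human SDR per month.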

Simple Definition (One‑liner)

An AI SDR is an AI‑powered system that finds leads, writes personalized outreach, follows up, qualifies responses, and books meetings—at a fraction of the cost of a human SDR.

Persona Marketing Footnote

Here’s a clear, no‑fluff comparison between ICP and persona marketing, and why they serve different jobs—especially in B2B and outbound.

ICP vs Persona Marketing (Side‑by‑Side)

| Dimension | ICP (Ideal Customer Profile) | Persona Marketing |
| --- | --- | --- |
| Purpose | Decide who to target | Decide how to talk to them |
| Scope | Company + buyer + problem + timing | Individual attitudes & preferences |
| Focus | Buying likelihood & value | Messaging tone & content |
| Time Horizon | Revenue & sales efficiency | Brand & awareness |
| Used By | Sales, RevOps, outbound AI | Marketing, content, brand teams |
| Success Metric | Replies, meetings, deals | Engagement, clicks, sentiment |
| Risk if wrong | Wasted pipeline | Weak messaging |

ICP: A Targeting Tool (Who Is Worth Selling To)

ICP answers strategic questions:

- Which companies should we pursue?

- Which roles inside them can buy?

- What problems are urgent right now?

- When does outbound make sense?

Example (Intellisophic):

ICP: Foundation AI teams preparing new training runs where compute costs and hallucinations are active pain points.

This determines:

- Who goes into your lead list

- Who your AI SDR messages

- Where you spend sales time

If ICP is wrong → everything downstream fails, even great copy.

Persona Marketing: A Messaging Tool (How They Think)

Personas answer communication questions:

- What objections do they raise?

- What language do they use?

- What do they fear or care about?

- What tone works best?

Example persona (same ICP):

Persona: “Skeptical AI Director”

- Cares about benchmarks

- Distrusts vendor claims

- Responds to data, not hype

- Hates buzzwords

Personas help you:

- Write better emails

- Shape landing pages

- Craft sales conversations

But personas do not tell you who to target.

The Common Mistake (Why Many Teams Fail)

Many teams start with personas without an ICP.

They say:

“Our persona is a Head of AI who likes efficiency and innovation”

That’s not enough.

Without ICP:

- You message Heads of AI who aren’t buying

- You hit people with no urgency

- Outbound feels spammy

Persona without ICP = beautiful messaging to the wrong people.

The Correct Order (This Matters)

✅ Step 1: ICP

→ Identify who is most likely to buy now

✅ Step 2: Persona

→ Decide how to speak to them

✅ Step 3: Messaging & Outreach

→ Execute with relevance

Think of it like this:

- ICP = Target lock

- Persona = Aim adjustment

- Copy = Trigger pull

Example Using Intellisophic

ICP (Who)

- Foundation AI teams

- Scaling models

- Compute costs under scrutiny

- Preparing new training runs

Persona (How)

- Analytical, skeptical

- Responds to metrics (35–55% compute reduction)

- Hates marketing fluff

- Values peer‑level language

Result:

- Short, data‑driven emails

- No hype

- Focus on intelligence per dollar

When to Use Each

Use ICP When You Need To:

- Build lead lists

- Run outbound sales

- Prioritize accounts

- Train AI SDRs

- Decide where sales spends time

Use Personas When You Need To:

- Write emails

- Handle objections

- Build landing pages

- Shape demos

- Train human sales reps

One‑Line Summary

ICP decides who gets the message. Persona decides how the message sounds.