The Surfable Books Project as Primary Evidence (2001–2003)

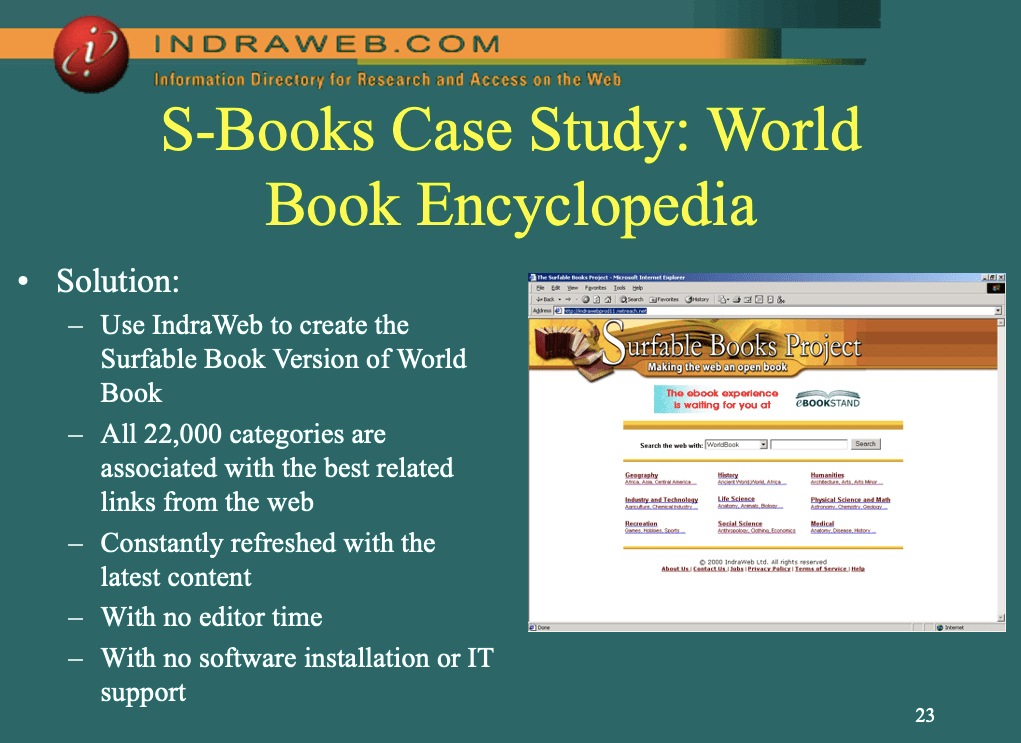

The archived Surfable Books Project website demonstrates that S‑Books were not an experimental prototype, but a fully operational semantic knowledge system. Users could search across all Surfable Books rather than individual titles, navigating knowledge by meaning instead of pages.

- Search across multiple encyclopedias and reference works

- Navigation by knowledge domains rather than documents

- Integrated titles such as World Book Encyclopedia 2000 and World Book Medical Encyclopedia

The titles were licensed from leading publishers including Brittanica, Elsevier, Wiley, covering millions of topics today.

Disciplines such as Geography, Life Science, Industry & Technology, Physical Science & Math, Humanities, and Medicine were first‑class navigational elements. The primary unit of interaction was conceptual meaning, not text location.

What S‑Books Actually Were (In Modern Terms)

Viewed through a 2026 lens, SurfableBooks functioned as a semantic AI system rather than an early e‑reader.

- A domain‑grounded knowledge graph

- A concept‑based retrieval system

- A semantic interface over unstructured text

S‑Books explicitly modeled meaning using taxonomies and ontologies, while modern LLMs rely on implicit statistical embeddings.

| S‑Books (2001) | Generative AI (2020s) |

|---|---|

| Explicit taxonomies | Implicit embeddings |

| Conceptual navigation | Token prediction |

| Domain closure | Open‑ended hallucination |

| Deterministic retrieval | Probabilistic generation |

The Direct Line: S‑Books → IndraWeb → MOSAEC

SurfableBooks were the civilian‑facing counterpart to IndraWeb, the semantic AI system that powered the U.S. counterintelligence platform MOSAEC following 9/11.

All three systems shared the same architectural foundation:

- Large‑scale ontologies and taxonomies

- Concept Query Language (CQL)

- Orthogonal Corpus Indexing

- Concept‑based search rather than keyword search

The difference was not capability, but risk tolerance. SurfableBooks targeted education and publishing; IndraWeb and MOSAEC targeted national security, WMD detection, and counterterrorism.

Why This Matters for AGI

The SurfableBooks interface exposes a fundamental limitation of modern generative AI systems: LLMs know language, but they do not know domains.

S‑Books could traverse concepts such as The Human Body, move between Physics and Chemistry, or explore medical knowledge from Abdomen to Zygote because those concepts were explicitly defined and bounded.

LLMs, by contrast:

- Predict plausible text rather than validate meaning

- Cannot guarantee domain completeness

- Cannot reliably detect false premises

- Are vulnerable to data poisoning and semantic drift

The AGI Stall: Scaling Without Semantics

The AI industry assumed that scale could replace meaning. SurfableBooks prove the opposite.

Intelligence emerges from structured knowledge, not from statistical fluency alone. Without semantic grounding, LLMs lack a persistent world model and cannot accumulate true understanding.

Reframing the “Failure” of S‑Books

S‑Books did not fail technologically. They were early, expensive, and culturally misaligned with a web optimized for clicks rather than cognition.

The same tradeoffs now define the ceiling of generative AI.

The Lesson for 2026

S‑Books demonstrate that the missing ingredient in AGI is not more language, but more meaning.

The future of AI is not LLMs replacing semantic systems, but LLMs grounded by them. SurfableBooks were an early, working example of that grounding—long before deep learning, GPUs, or consumer AI hype.

Final Synthesis

- SurfableBooks proved semantic navigation at scale

- IndraWeb proved semantic intelligence under pressure

- MOSAEC proved semantic AI in national security

- LLMs prove that language alone is not intelligence

AGI will not arrive by scaling text prediction. It will arrive by reconnecting to the semantic foundations already laid by systems like S‑Books.